When A/B Testing Works

A/B tests are most effective when matched to your program’s stage of development and when your organization has the capabilities to design, implement, and learn from rigorous experiments.

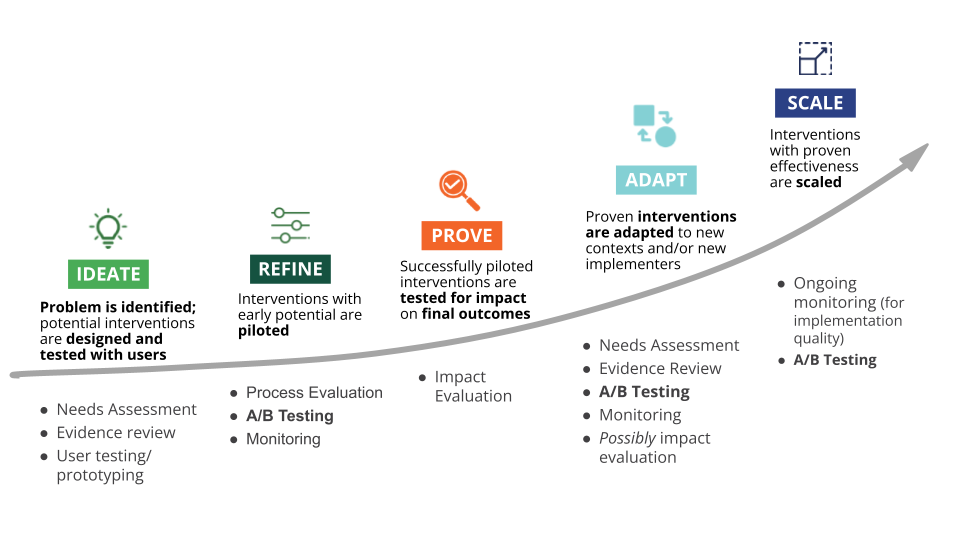

- Use the Stage-Based Learning framework to identify when A/B testing is appropriate

- A/B testing is most valuable at the Refine, Adapt, and Scale stages

- Organizations need specific technical and operational capabilities to implement A/B testing effectively

Deciding When to Use A/B Testing

A/B testing is a powerful method, but it is not the right tool for every situation. Deciding when to do A/B testing requires reflecting on the kind of learning questions a program needs to answer at its current stage of development, and whether the organization’s current capabilities can support experimentation.

When to Do A/B Testing: Stage-Based Learning

To identify when to use A/B testing, IPA’s Right-Fit Evidence Unit proposes an overarching framework that guides learning activities across a program’s stages of development. The Stage-Based Learning framework helps organizations identify their learning needs based on program maturity¹. Each stage (Ideate, Refine, Prove, Adapt, and Scale) is associated with particular types of learning needs, and those needs call for different methods that can generate insights that are credible, actionable, and cost-effective, and that are likely to produce transportable knowledge.

In the early stages of an intervention, the focus of an organization should be on understanding the problem, rapidly testing potential solutions with users, and refining the design. In later stages, the priority shifts to demonstrating impact, adapting to new contexts, and ensuring consistent performance at scale. A/B testing is one of several learning methods, and it is particularly relevant for programs at the Refine, Adapt, and Scale stages of the framework, when organizations face questions about how to improve or adapt specific components of an intervention in a different context or at a greater scale.

Learning Needs at Different Stages

| Stage | Stage identification | Learning focus | Key learning questions |
|---|---|---|---|
| Refine | The intervention’s initial design is complete and its key components have been tested with a small group of users. | Iteratively test the intervention to assess implementation quality and early signs of effectiveness. | • Which delivery channels or engagement strategies are most effective at increasing take-up and/or reach among the target population? • Which components of the intervention are driving changes in early outcomes? • What elements can be improved before the intervention is evaluated for impact and/or scaled? |
| Adapt | The intervention has demonstrated positive impact through a rigorous impact evaluation and will be implemented in a new context². | Adapt the model to the challenges and characteristics of the new context, while aiming for efficiency. | • What adaptations are needed for the intervention to be effective and relevant in the new context? • How can the same or a similar level of impact be achieved at lower cost? |
| Scale | The intervention has demonstrated positive impact and has been adapted to be implemented with quality at scale. | Ensure that implementation quality is maintained when reaching more participants. | • Are there more efficient ways to deliver the program at scale while maintaining quality? |

When A/B Testing Supports Learning Goals

In the Refine stage, A/B testing can support these learning needs by enabling organizations to systematically test variations³ of the program that address the questions above.

An EdTech organization piloting an AI-enabled teaching assistant may want to test whether adding a classroom feedback feature improves instructional quality. By running an A/B test that compares two groups (one with the feedback feature and one without) and tracks student performance, the organization can assess whether the addition meaningfully improves outcomes.
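To make the comparison concrete, here is a minimal Python sketch of how the two groups’ outcomes could be compared once such a test has run. It assumes the outcome is one numeric score per student, that students were randomly assigned to the two groups, and that both groups are large enough for a normal approximation to hold. `compare_arms` is a hypothetical helper written for illustration, not part of any IPA tool.

```python
import math
import statistics
from statistics import NormalDist


def compare_arms(control: list[float], treatment: list[float], alpha: float = 0.05) -> dict:
    """Hypothetical helper: difference in mean outcomes between two arms.

    Uses unequal-variance (Welch-style) standard errors and a normal
    approximation, which is only reasonable when both arms are fairly large.
    """
    diff = statistics.fmean(treatment) - statistics.fmean(control)
    # Standard error of the difference in means, allowing unequal variances.
    se = math.sqrt(
        statistics.variance(treatment) / len(treatment)
        + statistics.variance(control) / len(control)
    )
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z = diff / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided p-value
    return {
        "diff": diff,
        "ci": (diff - z_crit * se, diff + z_crit * se),
        "p_value": p_value,
    }
```

The key output is the confidence interval rather than the p-value alone: if the whole interval sits above zero, the feature likely helps; if it straddles zero, the test is inconclusive at that sample size. For small groups, a t-test such as `scipy.stats.ttest_ind(..., equal_var=False)` would be the more appropriate choice.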

In the Adapt stage, A/B testing can support learning goals by comparing different versions of the intervention or delivery model to assess which approach works best in a new context.

A proven student learning platform expanding to a new country might test different tones for its AI-enabled coach to determine which one is most effective at engaging students.

In the Scale stage, A/B testing can support learning goals by testing adaptations to a delivery model to determine which approach is more cost-effective at scale.

An EdTech program scaling nationwide might compare SMS reminders, which are costly, with in-app notifications to see which achieves higher student retention at lower cost.
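A comparison like this ultimately comes down to cost per unit of outcome, not retention alone. The minimal Python sketch below computes cost per retained student for each channel. The `Arm` class, its field names, and all figures in the usage example are hypothetical, and the sketch uses point estimates only; a real analysis would also account for the statistical uncertainty in each retention rate.

```python
from dataclasses import dataclass


@dataclass
class Arm:
    """One delivery channel in the test (hypothetical structure)."""
    name: str
    users: int            # users randomly assigned to this channel
    retained: int         # users still active at the end of the test window
    cost_per_user: float  # delivery cost per assigned user, any currency unit

    @property
    def retention_rate(self) -> float:
        return self.retained / self.users

    @property
    def cost_per_retained_user(self) -> float:
        # Total delivery cost divided by the number of users retained.
        return self.users * self.cost_per_user / self.retained


def more_cost_effective(a: Arm, b: Arm) -> Arm:
    """Return the arm with the lower cost per retained user."""
    return a if a.cost_per_retained_user <= b.cost_per_retained_user else b


# Illustrative (made-up) numbers: SMS retains more users but costs far more.
sms = Arm("SMS", users=1000, retained=600, cost_per_user=0.50)
app = Arm("in-app", users=1000, retained=550, cost_per_user=0.05)
print(more_cost_effective(sms, app).name)
```

With these made-up figures, SMS has the higher retention rate but the in-app channel has the far lower cost per retained student, which is exactly the kind of trade-off the test is meant to surface.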

The insights gained from A/B testing help organizations strengthen interventions with rigorous evidence from real-world implementation, either before making larger investments in an impact evaluation or afterwards, to keep adapting the intervention at scale. A/B testing can be a very useful methodology for refining and adapting programs, but its potential depends on an organization’s ability to design credible experiments, interpret results accurately, and act on them. These requirements often limit its use, so our approach is to develop an A/B testing system that builds the capabilities needed to test and refine continuously.

When an A/B Testing System is a Good Fit for a Tech-Forward Organization

Selecting methodologies that align with the organization’s existing capabilities is crucial for a good learning-to-cost ratio, that is, obtaining the most useful data at the lowest possible cost. While A/B testing can offer powerful insights, it is most appropriate for organizations that have, or aim to build, the capabilities needed to design, implement, and learn from rigorous experiments. These include technical and operational requirements that ensure tests are feasible and results are reliable. Section 3 provides a step-by-step process for developing these capabilities as organizations build and embed an A/B testing system within their MEL (monitoring, evaluation, and learning) toolkit.

Drawing on experience developing A/B testing systems with organizations, we have found that strengthening the following capabilities is especially important:

Program Conditions

Clarity on learning goals

- What the organization wants to learn with the A/B test (e.g. improving uptake or retention rates, increasing meaningful engagement, or reducing costs)

- Quick rule of thumb: if you can state the decision you’ll make under each possible result before launching, you have clear learning goals. If not, you likely need discovery research first (needs assessment, prototyping, user research).

Clear implementation plans

- A/B testing can only be done while an intervention is running or a product is live

- If the product isn’t live yet, do user tests or prototyping first

Stable and sufficiently large user base

- To support rigorous comparisons

- If power calculations do not support statistical rigor, prioritize qualitative methods
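As a rough illustration of the power calculation mentioned above, the sketch below uses the standard normal-approximation formula for comparing two proportions (two-sided test, unpooled variance, no continuity correction). The retention rates, significance level, and power in the example are assumptions chosen for illustration, and `users_per_arm` is a hypothetical helper; dedicated tools or a statistician should be used for real test designs.

```python
import math
from statistics import NormalDist


def users_per_arm(p_control: float, p_treatment: float,
                  alpha: float = 0.05, power: float = 0.80) -> int:
    """Hypothetical helper: approximate users needed in EACH arm to detect
    a change in a rate from p_control to p_treatment."""
    if p_control == p_treatment:
        raise ValueError("the two rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    n = (z_alpha + z_beta) ** 2 * variance / (p_control - p_treatment) ** 2
    return math.ceil(n)  # round up to whole users


# Assumed scenario: retention rising from 30% to 35%.
print(users_per_arm(0.30, 0.35))
```

Under these illustrative assumptions, the formula calls for a little under 1,400 users per arm. If the active user base cannot supply roughly twice that during the test window, the test would be underpowered and qualitative methods are likely the better choice. Smaller expected effects push the required sample up quickly, since it scales with one over the squared difference.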

Systems and Tools

Reliable data systems

- To track relevant outcomes, such as user interaction with the platform, impact-related metrics, and experiment performance indicators

Experimentation tools or software

- Developed internally or integrated from a third party

- Allow for random assignment and the management of multiple tests
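One common way experimentation tools handle random assignment across multiple tests is deterministic hashing: the user ID is hashed together with the experiment name, so each user always sees the same variant within a test while different tests get independent splits. The Python sketch below illustrates that idea; `assign_variant` and the experiment names are hypothetical, and third-party tools may implement assignment differently.

```python
import hashlib


def assign_variant(user_id: str, experiment: str,
                   variants: tuple[str, ...] = ("A", "B")) -> str:
    """Hypothetical helper: deterministically assign a user to a variant.

    Salting the hash with the experiment name means the same user can land
    in different arms of different experiments, while repeated calls for the
    same experiment always return the same arm.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# Roughly half of users should land in each arm.
in_a = sum(assign_variant(f"user-{i}", "feedback-feature") == "A"
           for i in range(10_000))
print(in_a)
```

Because assignment depends only on the IDs, no assignment table needs to be stored, and the split can be reproduced later during analysis.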

Technical Capacity

Technology capacity

- To build or set up the infrastructure for experimentation

- Including data workflows, tool integration, and the delivery of test variations in live environments

Analytical capacity

- A person on the team who knows both the program and the technological infrastructure

- Can allocate time to design valid experiments, interpret statistical results, and turn findings into actionable recommendations

Building Capacity Over Time

Organizations do not need to master all these capabilities at once. Many can start with other low-cost learning methods to build capacity over time, using early experiences as opportunities to strengthen data practices and foster a culture of learning. These early steps help teams build capacities and confidence in using evidence for decision-making, laying the groundwork for more structured experimentation.

When Not to Run A/B Experiments, and What to Do Instead

When analytical tools and know-how are not available:

- Explore using other methods such as user research, prototyping, or simple analog tests

- Start building analytical and data capabilities

When the user base is small or volatile:

- Underpowered results won’t be credible

- Do usability tests, interviews, focus groups or small pilots instead

When measuring long-term outcomes:

- A/B tests are not well suited to detecting effects on long-term outcomes (e.g., changes in income)

- Consider an RCT to assess impact on final outcomes

When high interference or spillovers between users are expected:

- For example, when one user’s variant can influence another user’s outcome

- Consider cluster-level tests or use system-level methods

When outcomes are highly predictable or stakes are trivial:

- Try the obvious change and track performance with strong monitoring
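Where interference pushes a team toward cluster-level tests (for example, randomizing whole classrooms instead of individual students), it helps to know how much statistical power is lost. The sketch below uses the standard design-effect approximation for equal-sized clusters, 1 + (m − 1) × ICC, where m is the cluster size and ICC is the intra-cluster correlation of the outcome. The helper names and the example values (4,000 students, classrooms of 40, ICC of 0.10) are illustrative assumptions; in practice the ICC has to be estimated from prior data.

```python
def design_effect(cluster_size: float, icc: float) -> float:
    """Variance inflation from randomizing clusters instead of individuals
    (standard approximation for equal-sized clusters)."""
    return 1 + (cluster_size - 1) * icc


def effective_sample_size(total_users: int, cluster_size: float, icc: float) -> float:
    """Number of independent users a clustered sample is roughly 'worth'."""
    return total_users / design_effect(cluster_size, icc)


# Assumed scenario: 4,000 students in classrooms of 40, ICC = 0.10.
print(design_effect(40, 0.10))
print(effective_sample_size(4000, 40, 0.10))
```

In this assumed scenario the design effect is 4.9, so 4,000 clustered students carry roughly the information of about 816 individually randomized ones, which is why cluster-level tests usually need a much larger user base.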

The next section outlines a step-by-step approach for building an A/B testing system. The approach illustrates how key capabilities can be developed over time in a way that aligns with an organization’s stage of readiness. While designed to support teams starting from scratch, the process can also be adapted by organizations that already have some capabilities in place.

References

IPA Right-Fit Evidence Unit. (2024, October). Enabling stage-based learning: A funder’s guide to maximize impact. https://poverty-action.org/sites/default/files/2024-10/Enabling-Stage-Based-Learning-Full-Guide.pdf

Gugerty, M. K., & Karlan, D. (2018). The Goldilocks challenge: Right-fit evidence for the social sector. Oxford University Press. https://doi.org/10.1093/oso/9780199366088.001.0001

Notes

¹ IPA Right-Fit Evidence Unit. (2024, October). Enabling stage-based learning: A funder’s guide to maximize impact. https://poverty-action.org/sites/default/files/2024-10/Enabling-Stage-Based-Learning-Full-Guide.pdf

² This often involves delivering the intervention in new geographies, to new populations, at a later time, and/or through new partners.

³ A recent thought piece by IDinsight highlights an important pitfall: the risk of launching A/B tests before giving enough time to work out the kinks of new program designs. To avoid this, it is essential to ensure that variations to be tested are grounded in evidence or user research, as discussed in the next section. Additionally, when testing major components of a program, it is good practice to pilot them first to confirm they work as intended. This helps ensure that resources are not spent testing a variation that is clearly ineffective or infeasible to implement.