Data Cleaning

Best practices for data cleaning, focusing on manipulating raw data using Stata. Covers the essential steps from import to creating analysis-ready datasets.

Proper data cleaning is essential for analytical accuracy. Raw data requires modification before analysis. These modifications constitute cleaning. The cleaning process should always be reproducible, well documented, and defensive; the code should tell the user if the data is not as expected.
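As an illustration of what "defensive" can mean in practice, the sketch below builds a small toy dataset and runs a few checks that stop the do-file with an error when the data is not as expected. The dataset, the variable names (`hhid`, `age`, `village`), and the plausible age range are hypothetical and only serve the example.

```stata
* Minimal sketch of defensive checks. The toy data and all variable
* names are hypothetical and stand in for a real raw dataset.
clear
input int hhid byte age str10 village
101 34 "North"
102 51 "South"
103 27 "North"
end

* Stop with an error if an expected variable is missing.
confirm variable hhid age village

* Stop if hhid does not uniquely identify observations.
isid hhid

* Stop if any age is missing or outside an assumed plausible range.
assert inrange(age, 0, 120)

* Stop if the number of observations differs from what is expected.
assert _N == 3
```

If any check fails, Stata halts with a nonzero return code, so a problem in the raw data surfaces immediately instead of passing silently into later steps.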

The IPA/GPRL Data Cleaning Guide presents a set of common tasks and provides information on “what” each step is and “how” to complete each step in Stata. The guide also flags potential roadblocks during the data cleaning process. This guide assumes basic knowledge of Stata; introductory Stata training is available through IPA’s training repository.

This guide also covers other parts of the data flow, including coding best practices, deidentification, and version control. In many ways these topics are distinct from data cleaning, but they all interrelate to some extent. Effective data cleaning follows coding best practices, uses a version control system, and includes thorough documentation.

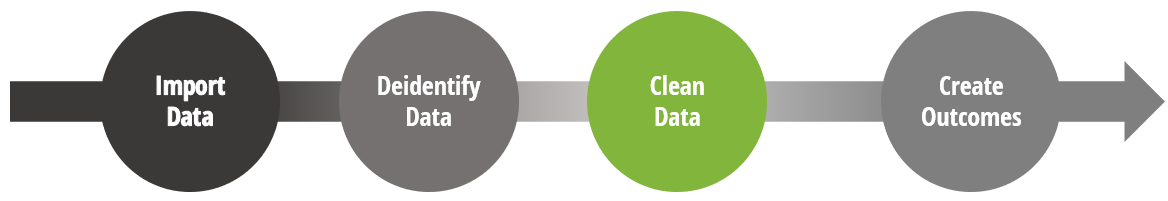

Data flow

At a high level, data moves from a format that reflects how it was collected to a format suitable for analysis. This transition takes data from the point of generation, for example in surveys or automated banking systems, to analysis-ready datasets. The contents of the data do not change during this process, but the storage, aggregation, and labeling formats do.

This entire process constitutes a data flow. Differences in the data may make it impossible to follow the order below exactly, but deidentification should happen as early as possible if the data contains personally identifying information (PII). At GPRL and IPA, the data flow includes four key steps that take place in statistical software:

- Import data: Combine all collected data into a format readable by statistical software. This step imports raw data, applies corrections from enumerators, and removes duplicate observations.

- Deidentify data: Remove personally identifying information (PII). This includes all individually identifying information, such as geographic information, names, addresses, and enumerator comments, as well as group identifying information, such as a combination of village and birthdate.

- Clean data: Standardize data content, formats, and encoding. After this, verify data consistency and append similar datasets to create single datasets for outcome creation.

- Create outcomes: Create individual outcome variables from the clean data. Merge and append data as part of this process to make a dataset at the level of analysis needed.
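The four steps above can be sketched on a toy dataset. This is a simplified illustration, not the guide's actual workflow: the data is typed in with `input` where a real project would use a command such as `import delimited`, and all variable names and values are hypothetical.

```stata
* Sketch of the four data flow steps on toy data.
* All variable names and values are hypothetical.
clear

* 1. Import: a real project would read a raw file here instead.
input int hhid str12 name byte rice_kg byte maize_kg
1 "Resp A" 10 5
2 "Resp B" 0 12
2 "Resp B" 0 12
3 "Resp C" 7 .
end

* Drop observations that are duplicated on every variable,
* then confirm that hhid is a unique identifier.
duplicates drop
isid hhid

* 2. Deidentify: drop direct identifiers as early as possible.
drop name

* 3. Clean: document variable content with labels.
label variable rice_kg "Rice harvested (kg)"
label variable maize_kg "Maize harvested (kg)"

* 4. Create outcomes: total harvest, which is missing when
* either component is missing.
generate total_kg = rice_kg + maize_kg
label variable total_kg "Total harvest (kg)"
```

Leaving the total missing when a component is missing is a design choice made for this sketch; a real project should decide and document how missing components are handled.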

Data cleaning

Analysts cannot use raw data directly for analysis. Individual survey items usually lack sufficient information on their own. Researchers must create outcome variables from standardized sets of variables. Additionally, analysts must add documentation so data users understand what each dataset contains.

Raw data often needs corrections and deduplication that require additional data from enumerators or respondents. Collecting this replacement data is part of the data collection process, and researchers often collect and apply these replacements during monitoring. IPA and GPRL have produced many tools and resources to support this process. In particular, IPA’s Data Management System supports data quality monitoring, duplicate management, and corrections.

Even after data reaches an importable format, each raw dataset will have its own idiosyncrasies. The cleaning process attempts to standardize these idiosyncrasies in a reproducible way. Consider three surveys, each with slightly different outputs. Cleaning applies standardized modifications to their content so that the output from those datasets is equivalent in format. The code that produces the clean data should be able to run any number of times with the same result, and it should tell the user if something about the data has changed so that it can no longer accomplish its function.
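The three-survey case can be sketched as follows. The toy survey rounds, the variable names (`consent`, `consented`, `consent_txt`), and the differences between them are hypothetical; the point is only that each round is brought to a common format, with a check that fails if unexpected values appear, before the rounds are appended.

```stata
* Sketch only: the three toy survey rounds and every variable name
* are hypothetical.
clear
tempfile round1 round2 round3

* Round 1 stores consent as a 0/1 numeric variable.
input int hhid byte consent
1 1
2 0
end
save "`round1'"

* Round 2 uses a different variable name for the same item.
clear
input int hhid byte consented
3 1
4 1
end
rename consented consent
save "`round2'"

* Round 3 stores the answer as text.
clear
input int hhid str3 consent_txt
5 "Yes"
6 "No"
end
* Fail loudly if an unexpected answer ever appears in the raw data.
assert inlist(consent_txt, "Yes", "No")
generate byte consent = (consent_txt == "Yes")
drop consent_txt
save "`round3'"

* Combine the rounds once their formats match.
use "`round1'", clear
append using "`round2'" "`round3'", generate(round)
* generate() codes the data in memory as 0, so shift to 1, 2, 3.
replace round = round + 1
label define yesno 0 "No" 1 "Yes"
label values consent yesno
isid hhid
```

Because the do-file starts from `clear` and writes only to temporary files, it can be rerun any number of times and should produce the same combined dataset each time.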

The cleaning process includes four rough stages:

- Raw Survey Data Management

- Variable Management

- Dataset, Value, and Variable Documentation

- Data Aggregation

Each stage has a description in the guide, as well as a list of tasks with dedicated subpages. This guide also covers Stata coding practices relevant to this process and tasks related to outcome creation that require data management and are particularly prone to error in Stata.