What is A/B Testing?

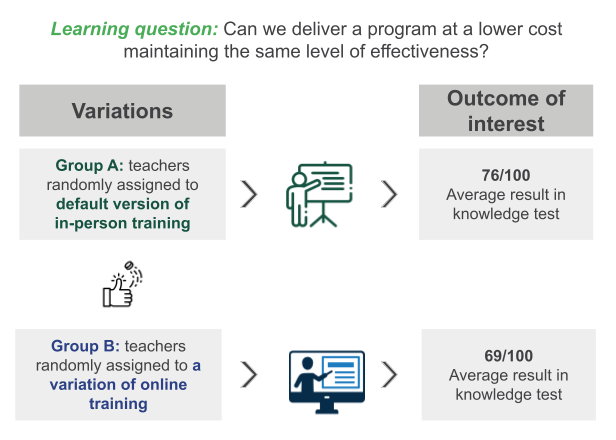

A/B testing is an experimentation method that compares variations of a program or product to determine which has the larger effect on specific outcomes, enabling rapid yet rigorous learning for social impact organizations.

- A/B testing enables rapid, iterative learning through systematic comparison of program variations

- It differs from traditional RCTs by focusing on short-term, actionable metrics using existing monitoring systems

- Building an A/B testing system helps organizations embed experimentation into regular workflows

This guide is a living product that is written and maintained publicly. We welcome feedback and corrections to improve this guide. If you would like to contribute, please reach out to rightfit@poverty-action.org, researchsupport@poverty-action.org or see the Contributing page.

A/B Testing vs. Randomized Controlled Trials

A/B testing falls under the broader umbrella of randomized experiments, since it relies on random assignment across conditions to generate causal evidence. In this resource, however, we distinguish the two more narrowly. We use “randomized controlled trials” (RCTs) to refer to studies that compare an intervention to the absence of the intervention (a pure control group) and that typically focus on long-term, final outcomes (e.g., income, learning gains). We use “A/B testing” to describe rapid experiments that compare program variations and usually rely on administrative or easily accessible monitoring and engagement data.
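To make the idea of random assignment across conditions concrete, here is a minimal Python sketch under stated assumptions. The function names (`assign_variants`, `two_proportion_z_test`), the fixed seed, and the example counts are hypothetical and do not come from this guide. The two-proportion z-test is one common way to compare a binary engagement outcome between two arms; it is not necessarily the analysis an organization would choose.

```python
import math
import random


def assign_variants(user_ids, seed=0):
    """Randomly split users into two (near-)equal arms labelled "A" and "B"."""
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    shuffled = list(user_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    # First half of the shuffled list goes to A, the rest to B.
    return {uid: ("A" if i < half else "B") for i, uid in enumerate(shuffled)}


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (difference B - A, z statistic, two-sided p-value)."""
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("No variation in the outcome; the test is undefined.")
    z = (p_b - p_a) / se
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return p_b - p_a, z, p_value


if __name__ == "__main__":
    # Hypothetical example: 2,000 users, outcome = completed a lesson this week.
    assignment = assign_variants(range(2000), seed=42)
    n_a = sum(1 for arm in assignment.values() if arm == "A")
    n_b = len(assignment) - n_a
    # Made-up completion counts, for illustration only.
    diff, z, p = two_proportion_z_test(120, n_a, 150, n_b)
    print(f"difference={diff:.3f}, z={z:.2f}, p={p:.3f}")
```

Because assignment is random, a difference in the outcome between the two arms can be attributed to the variation itself rather than to pre-existing differences between users, up to the statistical uncertainty the test quantifies.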

Both methodologies can be used for learning and evaluation purposes, but their strengths differ. A/B testing is most valuable for continuous learning and refinement throughout program implementation, answering the question “Which version works more effectively, efficiently, or scalably?” RCTs, by contrast, are most often used as a summative assessment that answers the question “Does the program work?”

The distinction we emphasize in this resource is practical: A/B testing can continuously generate rapid, actionable evidence for internal, short-term decision-making, whereas RCTs usually focus on assessing overall program impact with an external evaluator and a long-run lens.

Why A/B Testing Matters for Organizations

This practical distinction is important because A/B testing’s potential for rapid, iterative learning is a major reason why organizations should seek to internalize this capacity. Many organizations already have operational and MEL data systems, yet translating these data into actionable insights that lead to decision-making for program refinement remains a challenge. For this reason, building an A/B testing system can help organizations embed experimentation into regular workflows, supporting continuous innovation and program refinement.

This page provides practical guidance for tech-forward organizations looking to build an A/B testing system. It begins by discussing when A/B testing is an appropriate method for learning, and then outlines the key organizational capabilities needed to adopt an A/B testing system effectively. The next section introduces the Learning Roadmap for A/B testing, a structured approach developed by Innovations for Poverty Action’s (IPA) Right-Fit Evidence Unit (RFE) to help organizations design, prioritize, and implement A/B tests that are both rigorous and aligned with program cost-effectiveness goals. This approach supports organizations in building the processes and technology infrastructure needed to adopt A/B testing. Many of the examples used throughout the page are inspired by the RFE unit’s advisory engagements with partner organizations. The final section shares practical lessons from those engagements, in which RFE supported partners in adopting A/B testing and refining their educational technology (EdTech) interventions.

References

Angrist, N., Beatty, A., Cullen, C., & Matsheng, M. (2024). A/B testing in education: Rapid experimentation to optimise programme cost-effectiveness. What Works Hub for Global Education. Insight Note 2024/001. https://doi.org/10.35489/BSGWhatWorksHubforGlobalEducation-RI_2024/001